1. Introduction

Digital communication depends on computers being able to process human language. At the heart of this capability is the binary system, which uses just two digits, 0 and 1. This blog post explores how computers encode human language as binary, process it, and then convert it back into readable text.

2. Binary Representation of Text

2.1 ASCII Encoding

ASCII (American Standard Code for Information Interchange) is one of the simplest ways to encode text into binary. Each character is assigned a unique 7-bit code, which gives 128 possible values. For example, the letter “A” has code 65, which is 1000001 in seven bits and is usually stored in an 8-bit byte as 01000001. This mapping lets computers store English letters, digits, punctuation, and control characters as numbers.
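To make the mapping concrete, here is a minimal Python sketch that prints the bit pattern for each character of an ASCII string. The helper name `ascii_bits` is made up for this post; it pads each code to a full 8-bit byte, which is how ASCII is normally stored.

```python
def ascii_bits(text):
    """Return the 8-bit binary string for each ASCII character in text."""
    # str.encode("ascii") raises UnicodeEncodeError for non-ASCII input.
    return [format(byte, "08b") for byte in text.encode("ascii")]


print(ascii_bits("A"))   # ['01000001']
print(ascii_bits("Hi"))  # ['01001000', '01101001']
```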

2.2 UTF-8 Encoding

UTF-8 is a more versatile encoding that can represent every character in Unicode, covering most of the world’s writing systems as well as symbols and emoji. It uses one to four bytes per character, and its one-byte range is identical to ASCII, so any ASCII text is already valid UTF-8. This flexibility is essential for global communication in the digital age.
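A quick way to see the variable width is to encode a few characters and count the bytes. The sketch below uses Python's built-in `str.encode`; the helper name `utf8_length` and the sample characters are only illustrative choices.

```python
def utf8_length(char):
    """Return how many bytes one character occupies in UTF-8."""
    return len(char.encode("utf-8"))


# Escapes are used so the exact code points are unambiguous.
samples = {
    "A": "A",                   # Latin letter, same as ASCII
    "e-acute": "\u00e9",        # accented Latin letter
    "euro sign": "\u20ac",      # currency symbol
    "emoji": "\U0001F600",      # grinning face
}

for name, char in samples.items():
    print(name, utf8_length(char), char.encode("utf-8").hex())
# A 1 41
# e-acute 2 c3a9
# euro sign 3 e282ac
# emoji 4 f09f9880
```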

3. Storing and Processing Data

3.1 Data Structures

Once text is converted into binary, it can be stored and processed in various data structures.

3.1.1 Strings

A string is a sequence of characters, stored in memory as the bytes of some encoding. Because every character has a known numeric value, computers can search, compare, and transform text efficiently, whether for display or analysis.
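One consequence worth seeing in code is that the number of characters in a string and the number of bytes used to store it can differ. This is a small sketch, assuming UTF-8 as the storage encoding; `describe_string` is a hypothetical helper name.

```python
def describe_string(text):
    """Return (number of characters, number of UTF-8 bytes) for text."""
    return len(text), len(text.encode("utf-8"))


print(describe_string("hello"))       # (5, 5)
print(describe_string("h\u00e9llo"))  # (5, 6): the accented e takes two bytes
print("hello".upper())                # HELLO
```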

3.1.2 Arrays

An array stores elements of the same type in consecutive, indexed positions, so any element can be read or changed directly by its index. Text is often handled as an array of bytes or characters, which is what makes operations such as replacing a single letter fast.
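As a rough illustration of indexed access, the sketch below treats a word as a mutable array of bytes using Python's `bytearray`. The helper `replace_byte` is invented for this example and assumes ASCII-only input, so that one byte equals one character.

```python
def replace_byte(text, index, new_char):
    """Return text with the byte at index replaced (ASCII-only input assumed)."""
    data = bytearray(text.encode("ascii"))  # mutable array of byte values
    data[index] = ord(new_char)             # overwrite one element in place
    return data.decode("ascii")


print(list("cat".encode("ascii")))  # [99, 97, 116]
print(replace_byte("cat", 0, "b"))  # bat
```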

4. Natural Language Processing (NLP)

Natural Language Processing (NLP) is the field that focuses on how computers can understand and respond to human language.

4.1 Tokenization

One of the first steps in an NLP pipeline is tokenization: the stored bytes are decoded back into text, which is then split into smaller units called tokens, such as words, subwords, or punctuation marks.
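Here is a minimal sketch of that step: decode the bytes, then split on a simple pattern. The regular expression is a deliberately naive word-and-punctuation rule chosen for illustration; production systems typically use trained subword tokenizers instead.

```python
import re


def tokenize(raw, encoding="utf-8"):
    """Decode raw bytes to text, then split into word and punctuation tokens."""
    text = raw.decode(encoding)
    # \w+ matches a run of letters/digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


print(tokenize(b"Hello, world!"))  # ['Hello', ',', 'world', '!']
```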

4.2 Syntax and Parsing

Once the text is tokenized, the computer analyzes sentence structure. This parsing step identifies grammatical relationships between words, such as which noun is the subject of a verb, and is essential for interpreting complex sentences.
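To give a feel for what a parser produces, here is a toy sketch that handles exactly one sentence shape (determiner, noun, verb, determiner, noun). The four-word `LEXICON` and the grammar are invented for this post; real parsers rely on much larger grammars or trained models.

```python
# Hypothetical four-word lexicon mapping words to part-of-speech tags.
LEXICON = {"the": "DET", "dog": "NOUN", "cat": "NOUN", "chased": "VERB"}


def parse_sentence(tokens):
    """Parse tokens with the toy grammar S -> NP VP, NP -> DET NOUN, VP -> VERB NP."""
    position = 0

    def expect(tag):
        nonlocal position
        if position >= len(tokens) or LEXICON.get(tokens[position]) != tag:
            raise ValueError(f"expected {tag} at position {position}")
        word = tokens[position]
        position += 1
        return (tag, word)

    def noun_phrase():
        return ("NP", expect("DET"), expect("NOUN"))

    def verb_phrase():
        return ("VP", expect("VERB"), noun_phrase())

    tree = ("S", noun_phrase(), verb_phrase())
    if position != len(tokens):
        raise ValueError("unexpected tokens after the sentence")
    return tree


print(parse_sentence(["the", "dog", "chased", "the", "cat"]))
```

The result is a nested tuple in which the first noun phrase is the subject and the noun phrase inside the verb phrase is the object.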

4.3 Semantic Analysis

After syntax analysis, semantic analysis helps computers interpret the meaning behind the text. This includes understanding context, idioms, and nuances in language.

5. Machine Learning and Deep Learning

Machine learning plays a vital role in enhancing a computer’s ability to understand language.

5.1 Feature Extraction

Before or during training, the text is turned into numerical features, such as word counts or learned embedding vectors, so that models can learn patterns associated with language.
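One of the simplest feature schemes is a bag of words, which counts how often each vocabulary word appears. The sketch below is only one possible approach, and the vocabulary shown is an arbitrary example.

```python
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Return one count per vocabulary word, in vocabulary order."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


vocabulary = ["cat", "dog", "sat"]
print(bag_of_words(["the", "cat", "sat", "cat"], vocabulary))  # [2, 0, 1]
```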

5.2 Training Models

Models are trained using large datasets encoded in binary, allowing them to classify, generate, or translate language effectively.
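Real language models are far larger, but the basic training loop can be sketched with a perceptron, one of the oldest learning algorithms. In this toy example each input is a pair of word counts (how often "good" and "bad" appear), and the label is 1 for positive text and 0 for negative. The data and feature choice are invented purely for illustration.

```python
def predict(weights, bias, features):
    """Return 1 if the weighted sum of features is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_perceptron(examples, epochs=10):
    """Fit weights with the classic perceptron update rule."""
    weights = [0] * len(examples[0][0])
    bias = 0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            # Nudge the weights toward the correct answer when the guess is wrong.
            weights = [w + error * x for w, x in zip(weights, features)]
            bias += error
    return weights, bias


# Each example: ([count of "good", count of "bad"], label)
examples = [([1, 0], 1), ([2, 0], 1), ([0, 1], 0), ([0, 2], 0)]
weights, bias = train_perceptron(examples)
print(weights, bias)                  # [1, -1] 0
print(predict(weights, bias, [3, 1]))  # 1
```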

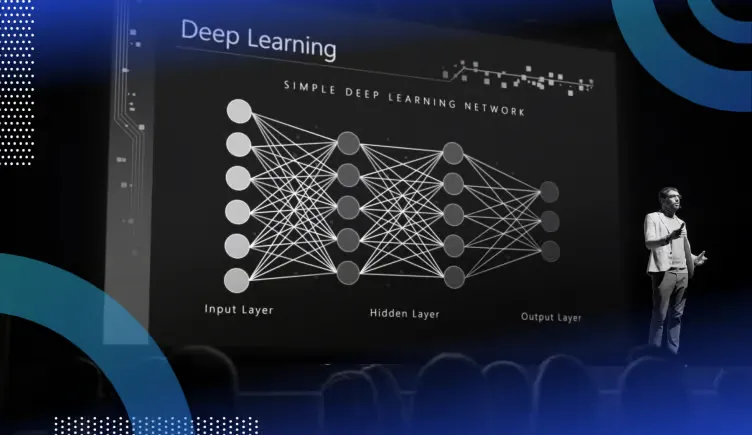

5.3 Neural Networks and Transformers

Modern NLP relies on neural networks, particularly transformers, which use an attention mechanism to weigh how relevant each token is to the others. This makes them effective at capturing context and generating coherent text.
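The core operation inside a transformer is scaled dot-product attention. The sketch below computes it for a single query in plain Python, leaving out everything else a real model has (learned projections, multiple heads, batching). The tiny vectors are made-up values for illustration.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    size = len(values[0])
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(size)]
    return weights, output


weights, output = attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[10.0], [20.0]])
print(weights, output)
```

The first key matches the query more closely, so it receives the larger weight and the output lands closer to the first value.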

6. Converting Outputs Back to Human Language

6.1 Decoding Binary to Text

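After processing, the computer converts its binary output back into readable text by decoding it with the same encoding that was used to encode it. Decoding with a different encoding produces garbled characters.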
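A short sketch shows both the round trip and what goes wrong when the encodings do not match. It uses Python's built-in `bytes.decode`; the helper name `decode_text` and the sample word are just for illustration.

```python
def decode_text(raw, encoding="utf-8"):
    """Decode raw bytes into a string using the given encoding."""
    return raw.decode(encoding)


raw = "caf\u00e9".encode("utf-8")      # the word "cafe" with an accented e
print(raw.hex())                       # 636166c3a9
print(decode_text(raw, "utf-8"))       # correct: the original word
print(decode_text(raw, "latin-1"))     # garbled: the two-byte e becomes two characters
```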

6.2 Output Generation

Finally, the generated text is displayed to users, whether in response to queries or as translated content.

7. Challenges and Limitations

Despite advancements, challenges persist in language processing.

7.1 Ambiguity in Language

Human language is inherently ambiguous. Words can have multiple meanings depending on context, posing a challenge for machines.

7.2 Nuances and Cultural Variations

Sarcasm, slang, and cultural references can complicate understanding, making it difficult for computers to interpret language accurately.

8. Conclusion

The ability of computers to understand human language through binary representation is a fascinating interplay of encoding, processing, and decoding. As technology continues to evolve, so too will the sophistication of language processing, paving the way for more seamless interactions between humans and machines. The future of NLP holds promise for deeper understanding and more intuitive communication in the digital world.

This post highlights the journey from human language to binary digits and back, illustrating how computers make sense of the words we use every day.